- VRSight: An AI-driven Scene Description System to Improve Virtual Reality Accessibility for Blind People. UIST 2025. Daniel Killough, Justin Feng, Zheng Xue Ching, Daniel Wang, Rithvik Dyava, Yapeng Tian, and Yuhang Zhao

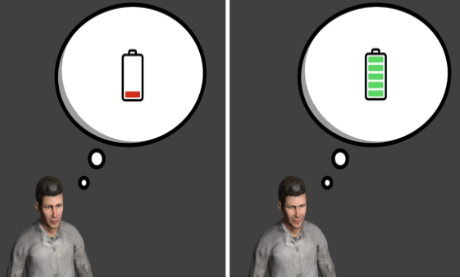

Virtual Reality (VR) is inaccessible to blind people. While research has investigated many techniques to enhance VR accessibility, they require additional developer effort to integrate. As such, most mainstream VR apps remain inaccessible as the industry de-prioritizes accessibility. We present VRSight, an end-to-end system that recognizes VR scenes post hoc through a set of AI models (e.g., object detection, depth estimation, LLM-based atmosphere interpretation) and generates tone-based, spatial audio feedback, empowering blind users to interact in VR without developer intervention. To enable virtual element detection, we further contribute DISCOVR, a VR dataset consisting of 30 virtual object classes from 17 social VR apps, substituting for real-world datasets that remain inapplicable to VR contexts. Nine participants used VRSight to explore an off-the-shelf VR app (Rec Room), demonstrating its effectiveness in facilitating social tasks like avatar awareness and available seat identification.

- AROMA: Mixed-Initiative AI Assistance for Non-Visual Cooking by Grounding Multimodal Information Between Reality and Videos. UIST 2025. Zheng Ning, Li Leyang, Daniel Killough, JooYoung Seo, Patrick Carrington, Yapeng Tian, Yuhang Zhao, Franklin Mingzhe Li, and Toby Jia-Jun Li

Videos offer rich audiovisual information that can support people in performing activities of daily living (ADLs), but they remain largely inaccessible to blind or low-vision (BLV) individuals. In cooking, BLV people often rely on non-visual cues (such as touch, taste, and smell) to navigate their environment, making it difficult to follow the predominantly audiovisual instructions found in video recipes. To address this problem, we introduce Aroma, an AI system that provides timely, real-time, context-aware assistance by integrating non-visual cues perceived by the user, a wearable camera feed, and video recipe content. Aroma uses a mixed-initiative approach: it responds to user requests while also proactively monitoring the video stream to offer timely alerts and guidance. This collaborative design leverages the complementary strengths of the user and AI system to align the physical environment with the video recipe, helping the user interpret their current state and make sense of the steps. We evaluated Aroma through a study with eight BLV participants and offered insights for designing interactive AI systems to support BLV individuals in performing ADLs.

- FocusView: Understanding and Customizing Informational Video Watching Experiences for Viewers with ADHD. ASSETS 2025. Hanxiu ‘Hazel’ Zhu, Ruijia Chen, and Yuhang Zhao

While videos have become increasingly prevalent in delivering information across different educational and professional contexts, individuals with ADHD often face attention challenges when watching informational videos due to the dynamic, multimodal, yet potentially distracting video elements. To understand and address this critical challenge, we designed FocusView, a video customization interface that allows viewers with ADHD to customize informational videos from different aspects. We evaluated FocusView with 12 participants with ADHD and found that FocusView significantly improved the viewability of videos by reducing distractions. Through the study, we uncovered participants’ diverse perceptions of video distractions (e.g., background music as a distraction vs. stimulation boost) and their customization preferences, highlighting unique ADHD-relevant needs in designing video customization interfaces (e.g., reducing the number of options to avoid distraction caused by customization itself). We further derived design considerations for future video customization systems for the ADHD community.

- Characterizing Collective Efforts in Content Sharing and Quality Control for ADHD-relevant Content on Video-sharing Platforms. ASSETS 2025. Hanxiu ‘Hazel’ Zhu, Avanthika Senthil Kumar, Sihang Zhao, Ru Wang, Xin Tong, and Yuhang Zhao

Video-sharing platforms (VSPs) have become increasingly important for individuals with ADHD to recognize symptoms, acquire knowledge, and receive support. While videos offer rich information and high engagement, they also present unique challenges, such as information quality and accessibility issues for users with ADHD. However, little work has thoroughly examined the video content quality and accessibility issues, the impact, and the control strategies in the ADHD community. We fill this gap by systematically collecting 373 ADHD-relevant videos with comments from YouTube and TikTok and analyzing the data with a mixed-methods approach. Our study identified the characteristics of ADHD-relevant videos on VSPs (e.g., creator types, video presentation forms, quality issues) and revealed the collective efforts of creators and viewers in video quality control, such as authority building, collective quality checking, and accessibility improvement. We further derive actionable design implications for VSPs to offer more reliable and ADHD-friendly content.

-

Characterizing Visual Intents for People with Low Vision through Eye TrackingASSETS 2025Ru Wang, Ruijia Chen, Anqiao Erica Cai, Zhiyuan Li, Sanbrita Mondal, and Yuhang Zhao

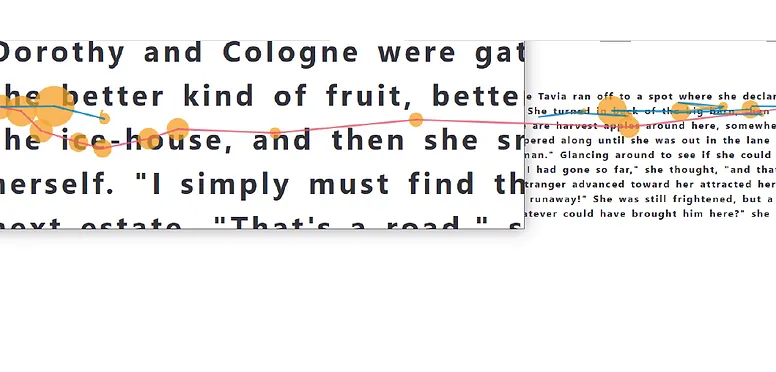

Characterizing Visual Intents for People with Low Vision through Eye TrackingASSETS 2025Ru Wang, Ruijia Chen, Anqiao Erica Cai, Zhiyuan Li, Sanbrita Mondal, and Yuhang ZhaoAccessing visual information is crucial yet challenging for people with low vision due to visual conditions like low visual acuity and limited visual fields. However, unlike blind people, low vision people have and prefer using their functional vision in daily tasks. Gaze patterns thus become an important indicator to uncover their visual challenges and intents, inspiring more adaptive visual support. We seek to deeply understand low vision users’ gaze behaviors in different image-viewing tasks, characterizing typical visual intents and the unique gaze patterns exhibited by people with different low vision conditions. We conducted a retrospective think-aloud study using eye tracking with 20 low vision participants and 20 sighted controls. Participants completed various image-viewing tasks and watched the playback of their gaze trajectories to reflect on their visual experiences. Based on the study, we derived a visual intent taxonomy with five visual intents characterized by participants’ gaze behaviors. We demonstrated the difference between low vision and sighted participants’ gaze behaviors and how visual ability affected low vision participants’ gaze patterns across visual intents. Our findings underscore the importance of combining visual ability information, visual context, and eye tracking data in visual intent recognition, setting up a foundation for intent-aware assistive technologies for low vision people.

- “It was Mentally Painful to Try and Stop”: Design Opportunities for Just-in-Time Interventions for People with Obsessive-Compulsive Disorder in the Real World. ASSETS 2025. Ru Wang, Kexin Zhang, Yuqing Wang, Keri Brown, and Yuhang Zhao

Obsessive-compulsive disorder (OCD) is a mental health condition that significantly impacts people’s quality of life. While evidence-based therapies such as exposure and response prevention (ERP) can be effective, managing OCD symptoms in everyday life, an essential part of treatment and independent living, remains challenging due to fear confrontation and lack of appropriate support. To better understand the challenges and needs in OCD self-management, we conducted interviews with 10 participants with diverse OCD conditions and seven therapists specializing in OCD treatment. Through these interviews, we explored the characteristics of participants’ triggers and how they shaped their compulsions, and uncovered key coping strategies across different stages of OCD episodes. Our findings highlight critical gaps between OCD self-management needs and currently available support. Building on these insights, we propose design opportunities for just-in-time self-management technologies for OCD, including personalized symptom tracking, just-in-time interventions, and support for OCD-specific privacy and social needs, through technology and beyond.

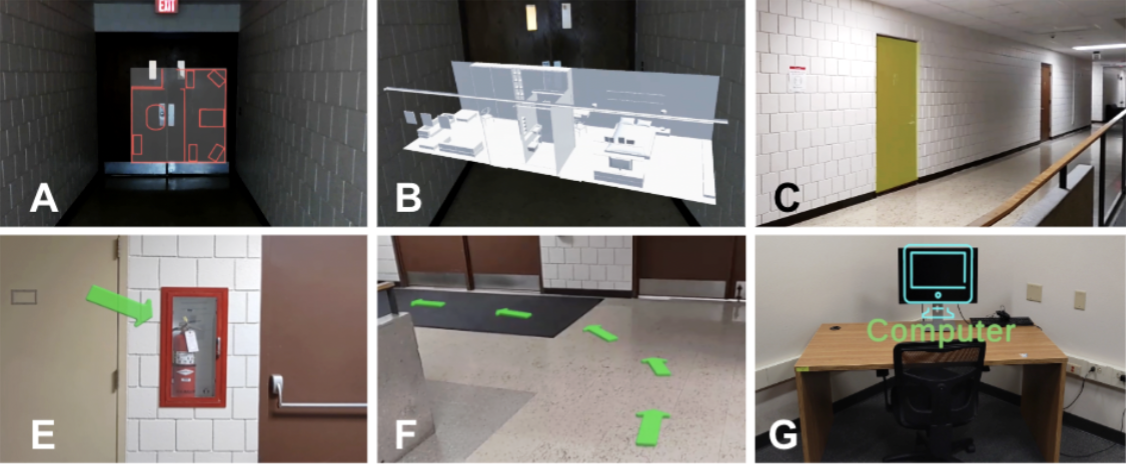

- VisiMark: Characterizing and Augmenting Landmarks for People with Low Vision in Augmented Reality to Support Indoor Navigation. CHI 2025. Ruijia Chen, Junru Jiang, Pragati Maheshwary, Brianna R. Cochran, and Yuhang Zhao

Landmarks are critical in navigation, supporting self-orientation and mental model development. Similar to sighted people, people with low vision (PLV) frequently look for landmarks via visual cues but face difficulties identifying some important landmarks due to vision loss. We first conducted a formative study with six PLV to characterize their challenges and strategies in landmark selection, identifying their unique landmark categories (e.g., area silhouettes, accessibility-related objects) and preferred landmark augmentations. We then designed VisiMark, an AR interface that supports landmark perception for PLV by providing both overviews of space structures and in-situ landmark augmentations. We evaluated VisiMark with 16 PLV and found that VisiMark enabled PLV to perceive landmarks they preferred but could not easily perceive before, and changed PLV’s landmark selection from only visually-salient objects to cognitive landmarks that are more important and meaningful. We further derive design considerations for AR-based landmark augmentation systems for PLV.

-

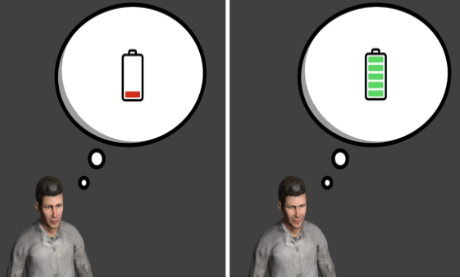

Inclusive Avatar Guidelines for People with Disabilities: Supporting Disability Representation in Social Virtual RealityCHI 2025Kexin Zhang, Edward Glenn Scott Spencer, Andric Li, Ang Li, Yaxing Yao, and Yuhang Zhao

Inclusive Avatar Guidelines for People with Disabilities: Supporting Disability Representation in Social Virtual RealityCHI 2025Kexin Zhang, Edward Glenn Scott Spencer, Andric Li, Ang Li, Yaxing Yao, and Yuhang ZhaoAvatar is a critical medium for identity representation in social virtual reality (VR). However, options for disability expression are highly limited on current avatar interfaces. Improperly designed disability features may even perpetuate misconceptions about people with disabilities (PWD). As more PWD use social VR, there is an emerging need for comprehensive design standards that guide developers and designers to create inclusive avatars. Our work aim to advance the avatar design practices by delivering a set of centralized, comprehensive, and validated design guidelines that are easy to adopt, disseminate, and update. Through a systematic literature review and interview with 60 participants with various disabilities, we derived 20 initial design guidelines that cover diverse disability expression methods through five aspects, including avatar appearance, body dynamics, assistive technology design, peripherals around avatars, and customization control. We further evaluated the guidelines via a heuristic evaluation study with 10 VR practitioners, validating the guideline coverage, applicability, and actionability. Our evaluation resulted in a final set of 17 design guidelines with recommendation levels.

-

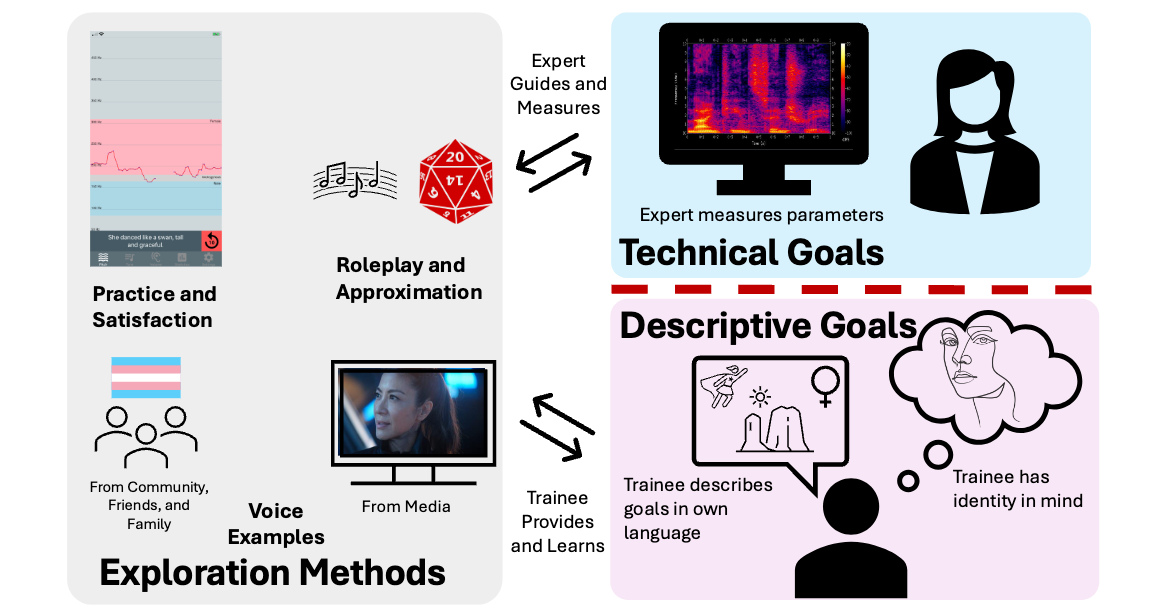

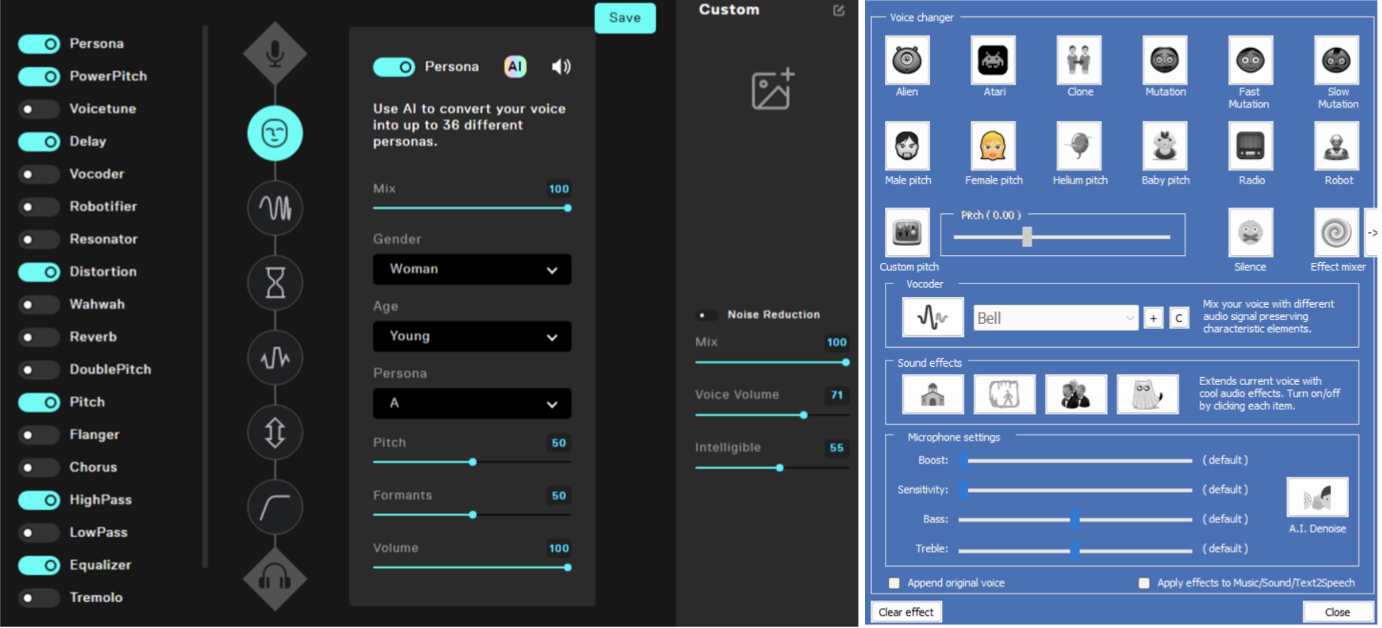

Beyond the "Industry Standard": Focusing Gender-Affirming Voice Training Technologies on Individualized Goal ExplorationCHI 2025 Best Paper Honorable MentionKassie Povinelli, Hanxiu "Hazel" Zhu, and Yuhang Zhao

Beyond the "Industry Standard": Focusing Gender-Affirming Voice Training Technologies on Individualized Goal ExplorationCHI 2025 Best Paper Honorable MentionKassie Povinelli, Hanxiu "Hazel" Zhu, and Yuhang ZhaoBest Paper Honorable Mention

Gender-affirming voice training is critical for the transition process for many transgender individuals, enabling their voice to align with their gender identity. Individualized voice goals guide and motivate the voice training journey, but existing voice training technologies fail to define clear goals. We interviewed six voice experts and ten transgender individuals with voice training experience (voice trainees), focusing on how they defined, triangulated, and used voice goals. We found that goal voice exploration involves navigation between approximate and clear goals, and continuous reevaluation throughout the voice training journey. Our study reveals how voice examples, character descriptions, and voice modification and training technologies inform goal exploration, and identifies risks of overemphasizing goals. We identified technological implications informed by the separation of voice goals and targets, and provide guidelines for supporting individualized goals throughout the voice training journey based on brainstorming with trainees and experts.

-

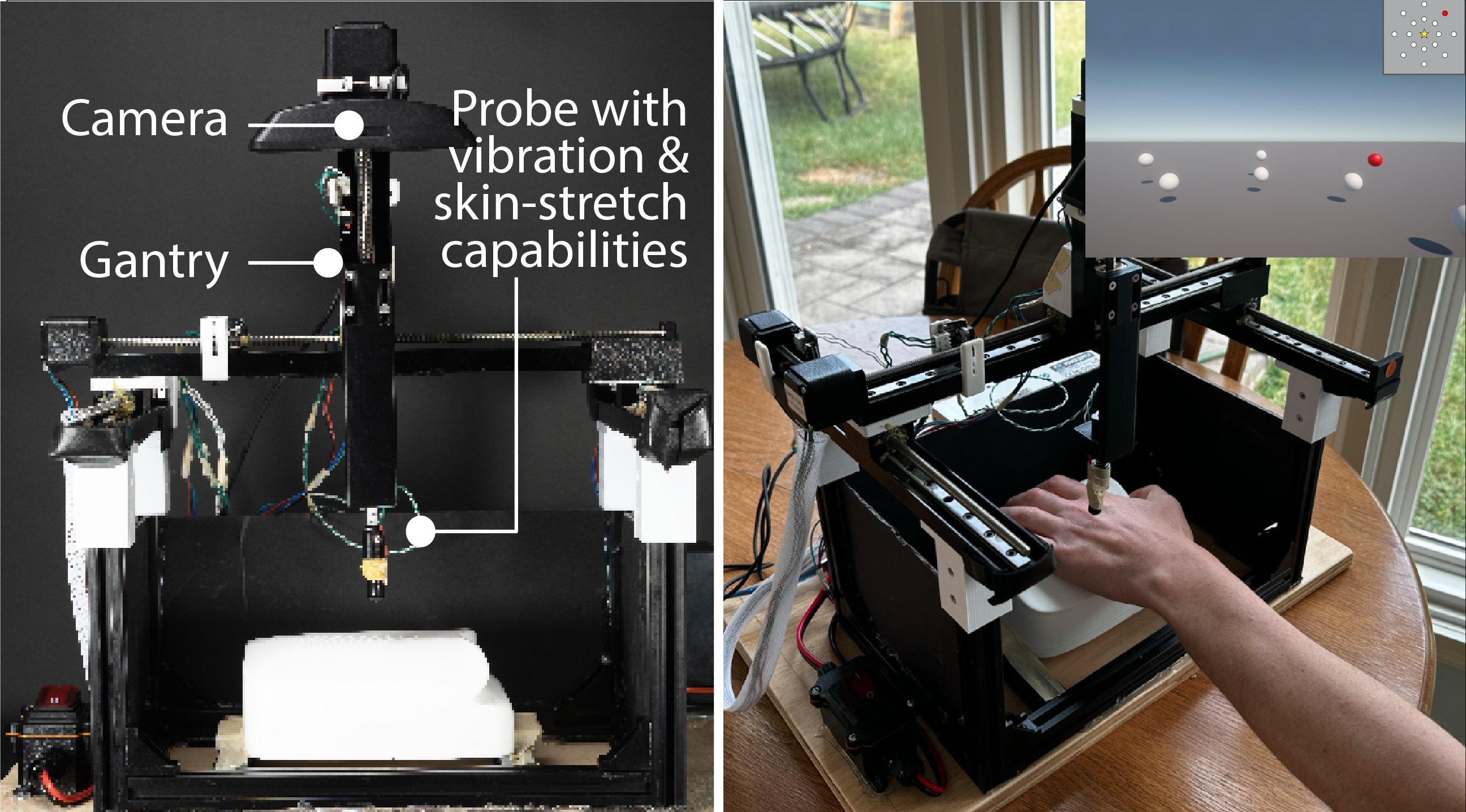

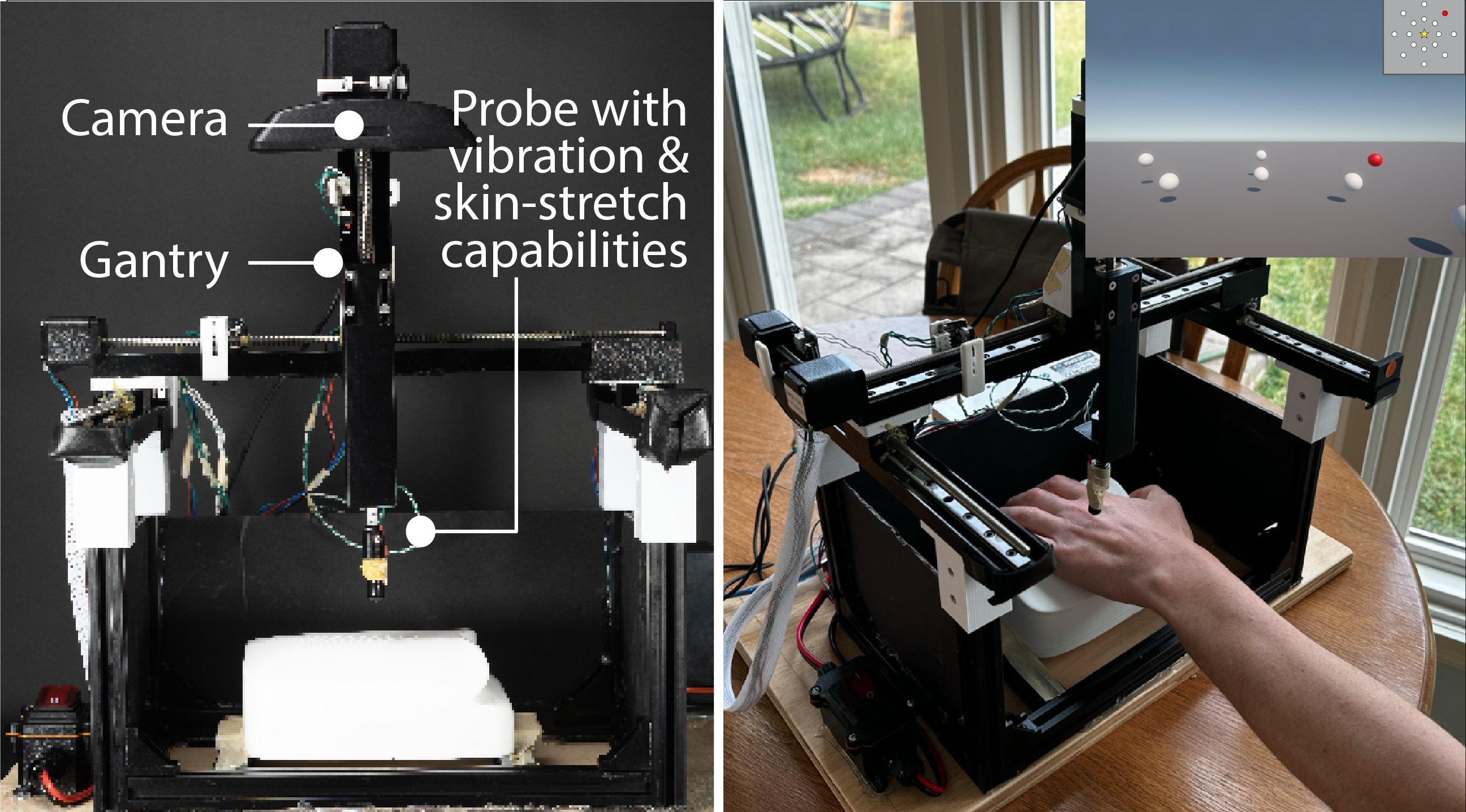

Comparing Vibrotactile and Skin-Stretch Haptic Feedback for Conveying Spatial Information of Virtual Objects to Blind VR UsersIEEE VR 2025Jiangsheng Li, Zining Zhang, Zeyu Yan, Yuhang Zhao, and Huaishu Peng

Comparing Vibrotactile and Skin-Stretch Haptic Feedback for Conveying Spatial Information of Virtual Objects to Blind VR UsersIEEE VR 2025Jiangsheng Li, Zining Zhang, Zeyu Yan, Yuhang Zhao, and Huaishu PengPerceiving spatial information of a virtual object (e.g., direction, distance) is critical yet challenging for blind users seeking an immersive virtual reality (VR) experience. To facilitate VR accessibility for blind users, in this paper, we investigate the effectiveness of two types of haptic cues—vibrotactile and skin-stretch cues—in conveying the spatial information of a virtual object when applied to the dorsal side of a blind user’s hand. We conducted a user study with 10 blind users to investigate how they perceive static and moving objects in VR with a custom-made haptic apparatus. Our results reveal that blind users can more accurately understand an object’s location and movement when receiving skin-stretch cues, as opposed to vibrotactile cues. We discuss the pros and cons of both types of haptic cues and conclude with design recommendations for future haptic solutions for VR accessibility.

- CookAR: Affordance Augmentations in Wearable AR to Support Kitchen Tool Interactions for People with Low Vision. UIST 2024, Belonging and Inclusion Best Paper Award. Jaewook Lee, Andrew D Tjahjadi, Jiho Kim, Junpu Yu, Minji Park, Jiawen Zhang, Jon E. Froehlich, Yapeng Tian, and Yuhang Zhao

Cooking is a central activity of daily living, supporting independence as well as mental and physical health. However, prior work has highlighted key barriers for people with low vision (LV) to cook, particularly around safely interacting with tools, such as sharp knives or hot pans. Drawing on recent advancements in computer vision (CV), we present CookAR, a head-mounted AR system with real-time object affordance augmentations to support safe and efficient interactions with kitchen tools. To design and implement CookAR, we collected and annotated the first egocentric dataset of kitchen tool affordances, fine-tuned an affordance segmentation model, and developed an AR system with a stereo camera to generate visual augmentations. To validate CookAR, we conducted a technical evaluation of our fine-tuned model as well as a qualitative lab study with 10 LV participants for suitable augmentation design. Our technical evaluation demonstrates that our model outperforms the baseline on our tool affordance dataset, while our user study indicates a preference for affordance augmentations over the traditional whole object augmentations.

-

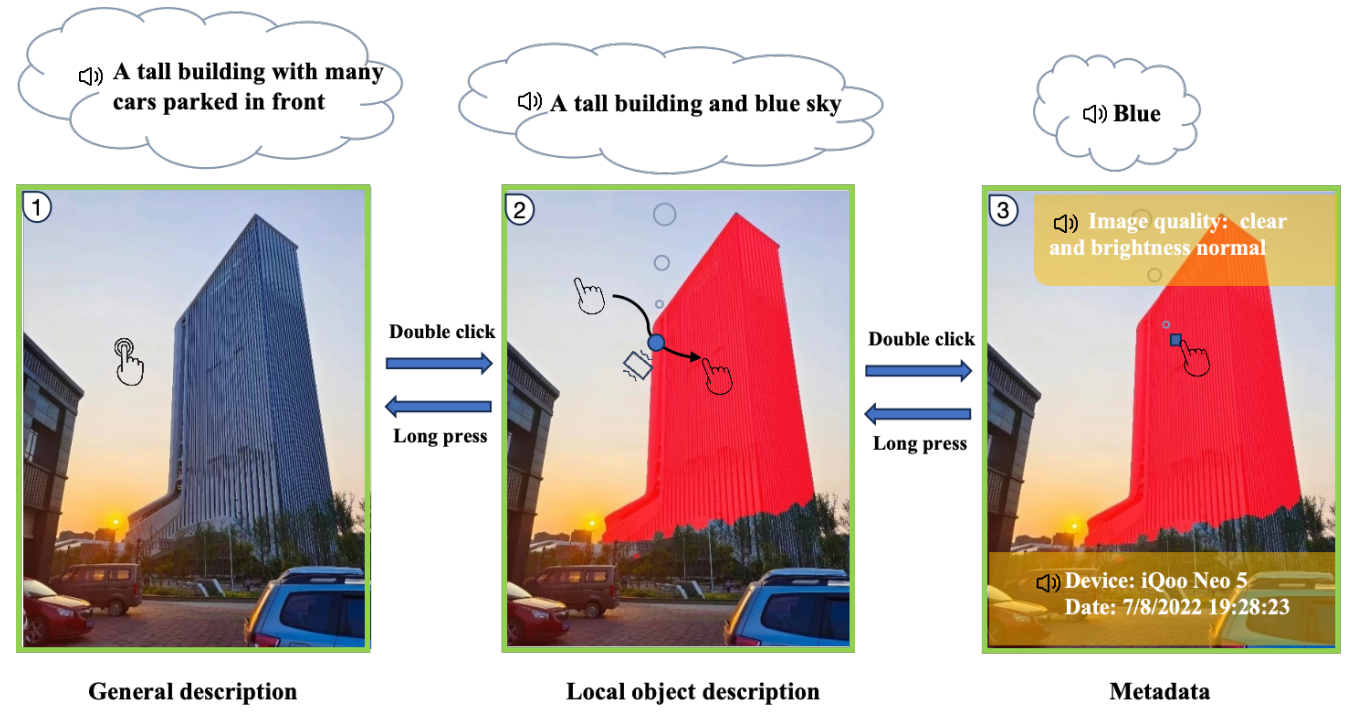

AI-Vision: A Three-Layer Accessible Image Exploration System For People with Visual Impairments in ChinaIMWUT 2024Kaixing Zhao, Rui Lai, Bin Guo, Le Liu, Liang He, and Yuhang Zhao

AI-Vision: A Three-Layer Accessible Image Exploration System For People with Visual Impairments in ChinaIMWUT 2024Kaixing Zhao, Rui Lai, Bin Guo, Le Liu, Liang He, and Yuhang ZhaoAccessible image exploration systems are able to help people with visual impairments (PVI) to understand image content by providing different types of interactions. With the development of computer vision technologies, image exploration systems are supporting more fine-grained image content processing, including image segmentation, description and object recognition. However, in developing countries like China, it is still rare for PVI to widely rely on such accessible system. To better understand the usage situation of accessible image exploration system in China and improve the image understanding of PVI in China, we developed AI-Vision, an Android based hierarchical accessible image exploration system supporting the generations of image general description, local object description and metadata information. Our 7-day diary study with 10 PVI verified the usability of AI-Vision and also revealed a series of design implications for improving accessible image exploration systems similar to AI-Vision.

- "This Really Lets Us See the Entire World:" Designing a Conversational Telepresence Robot for Homebound Older Adults. DIS 2024. Yaxin Hu, Laura Stegner, Yasmine Kotturi, Caroline Zhang, Yi-Hao Peng, Faria Huq, Yuhang Zhao, Jeffrey P Bigham, and Bilge Mutlu

In this paper, we explore the design and use of conversational telepresence robots to help homebound older adults interact with the external world. An initial needfinding study (N=8) using video vignettes revealed older adults’ experiential needs for robot-mediated remote experiences such as exploration, reminiscence, and social participation. We then designed a prototype system to support these goals and conducted a technology probe study (N=11) to garner a deeper understanding of user preferences for remote experiences. The study revealed users’ interaction patterns in each desired experience, highlighting the need for robot guidance and for social engagement with the robot and the remote bystanders. Our work identifies a novel design space where conversational telepresence robots can be used to foster meaningful interactions in the remote physical environment. We offer design insights into the robot’s proactive role in providing guidance and using dialogue to create personalized, contextualized, and meaningful experiences.

- GazePrompt: Enhancing Low Vision People’s Reading Experience with Gaze-Aware Augmentations. CHI 2024. Ru Wang, Zach Potter, Yun Ho, Daniel Killough, Linda Zeng, Sanbrita Mondal, and Yuhang Zhao

Reading is a challenging task for low vision people. While conventional low vision aids (e.g., magnification) offer certain support, they cannot fully address the difficulties faced by low vision users, such as locating the next line and distinguishing similar words. To fill this gap, we present GazePrompt, a gaze-aware reading aid that provides timely and targeted visual and audio augmentations based on users’ gaze behaviors. GazePrompt includes two key features: (1) a Line-Switching support that highlights the line a reader intends to read; and (2) a Difficult-Word support that magnifies or reads aloud a word that the reader hesitates with. Through a study with 13 low vision participants who performed well-controlled reading-aloud tasks with and without GazePrompt, we found that GazePrompt significantly reduced participants’ line switching time, reduced word recognition errors, and improved their subjective reading experiences. A follow-up silent-reading study showed that GazePrompt can enhance users’ concentration and perceived comprehension of the reading contents. We further derive design considerations for future gaze-based low vision aids.

- Exploring the Design Space of Optical See-through AR Head-Mounted Displays to Support First Responders in the Field. CHI 2024. Kexin Zhang, Brianna R Cochran, Ruijia Chen, Lance Hargung, Bryce Sprecher, Ross Tredinnick, Kevin Ponto, Suman Banerjee, and Yuhang Zhao

First responders navigate hazardous, unfamiliar environments in the field (e.g., mass-casualty incidents), making life-changing decisions in a split second. AR head-mounted displays (HMDs) have shown promise in supporting them due to their capability of recognizing and augmenting the challenging environments in a hands-free manner. However, the design spaces have not been thoroughly explored by involving various first responders. We interviewed 26 first responders in the field who experienced a state-of-the-art optical-see-through AR HMD, as well as its interaction techniques and four types of AR cues (i.e., overview cues, directional cues, highlighting cues, and labeling cues), soliciting their first-hand experiences, design ideas, and concerns. Our study revealed different first responders’ unique preferences and needs for AR cues and interactions, and identified desired AR designs tailored to urgent, risky scenarios (e.g., affordance augmentation to facilitate fast and safe action). While acknowledging the value of AR HMDs, concerns were also raised around trust, privacy, and proper integration with other equipment.

-

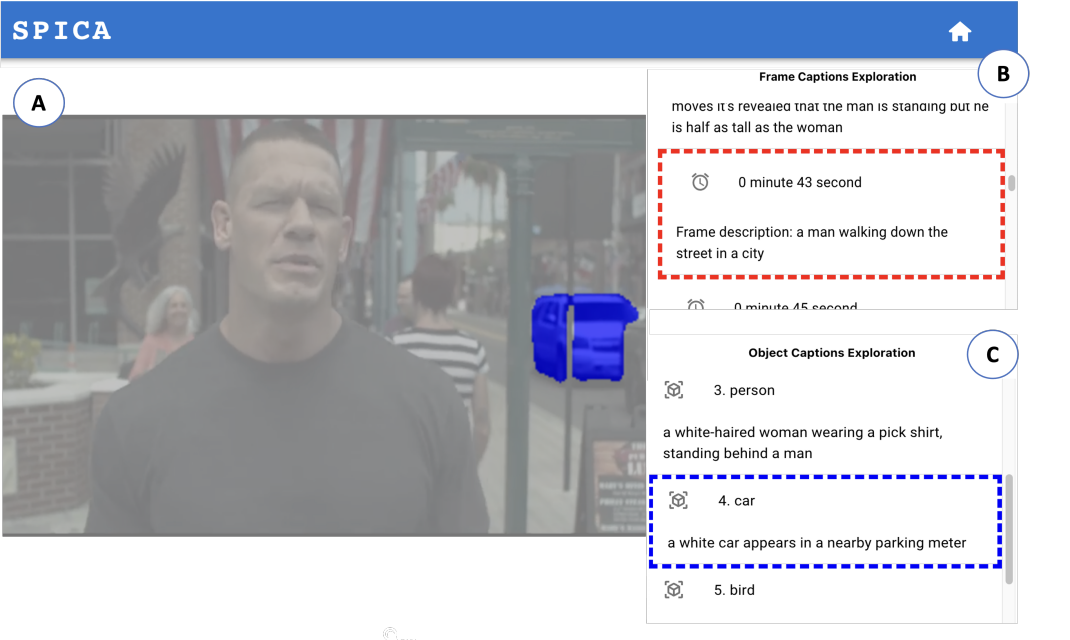

SPICA: Interactive Video Content Exploration through Augmented Audio Descriptions for Blind or Low-Vision ViewersCHI 2024Zheng Ning, Brianna L Wimer, Kaiwen Jiang, Jerrick Ban, Yapeng Tian, Yuhang Zhao, and Toby Jia-Jun Li

SPICA: Interactive Video Content Exploration through Augmented Audio Descriptions for Blind or Low-Vision ViewersCHI 2024Zheng Ning, Brianna L Wimer, Kaiwen Jiang, Jerrick Ban, Yapeng Tian, Yuhang Zhao, and Toby Jia-Jun LiBlind or Low-Vision (BLV) users often rely on audio descriptions (AD) to access video content. However, conventional static ADs can leave out detailed information in videos, impose a high mental load, neglect the diverse needs and preferences of BLV users, and lack immersion. To tackle these challenges, we introduce SPICA, an AI-powered system that enables BLV users to interactively explore video content. Informed by prior empirical studies on BLV video consumption, SPICA offers novel interactive mechanisms for supporting temporal navigation of frame captions and spatial exploration of objects within key frames. Leveraging an audio-visual machine learning pipeline, SPICA augments existing ADs by adding interactivity, spatial sound effects, and individual object descriptions without requiring additional human annotation. Through a user study with 14 BLV participants, we evaluated the usability and usefulness of SPICA and explored user behaviors, preferences, and mental models when interacting with augmented ADs.

-

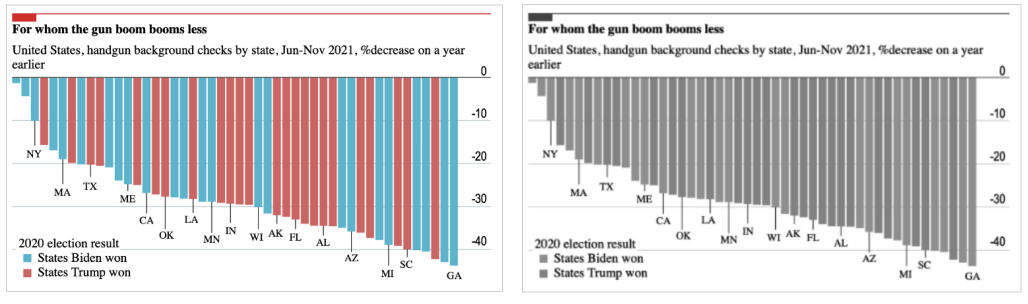

How Do Low-Vision Individuals Experience Information Visualization?CHI 2024Yanan Wang, Yuhang Zhao, and Yea-Seul Kim

How Do Low-Vision Individuals Experience Information Visualization?CHI 2024Yanan Wang, Yuhang Zhao, and Yea-Seul KimIn recent years, there has been a growing interest in enhancing the accessibility of visualizations for people with visual impairments. While much of the research has focused on improving accessibility for screen reader users, the specific needs of people with remaining vision (i.e., low-vision individuals) have been largely unaddressed. To bridge this gap, we conducted a qualitative study that provides insights into how low-vision individuals experience visualizations. We found that participants utilized various strategies to examine visualizations using the screen magnifiers and also observed that the default zoom level participants use for general purposes may not be optimal for reading visualizations. We identified that participants relied on their prior knowledge and memory to minimize the traversing cost when examining visualization. Based on the findings, we motivate a personalized tool to accommodate varying visual conditions of low-vision individuals and derive the design goals and features of the tool.

- StarRescue: the Design and Evaluation of A Turn-Taking Collaborative Game for Facilitating Autistic Children’s Social Skills. CHI 2024. Rongqi Bei, Yajie Liu, Yihe Wang, Yuxuan Huang, Ming Li, Yuhang Zhao, and Xin Tong

Autism Spectrum Disorder (ASD) presents challenges in social interaction skill development, particularly in turn-taking. Digital interventions offer potential solutions for improving autistic children’s social skills but often fail to address specific collaboration techniques. Therefore, we designed a prototype of a turn-taking collaborative tablet game, StarRescue, which encourages children’s distinct collaborative roles and interdependence while progressively enhancing sharing and mutual planning skills. We further conducted a controlled study with 32 autistic children to evaluate StarRescue’s usability and potential effectiveness in improving their social skills. Findings indicated that StarRescue has great potential to foster turn-taking and social communication skills, with effects that also extended beyond the game. Additionally, we discussed implications for future work, such as including parents as game spectators and understanding autistic children’s territory awareness in collaboration. Our study contributes a promising digital intervention for autistic children’s turn-taking social skill development via a scaffolding approach and valuable design implications for future research.

- Springboard, Roadblock or "Crutch"?: How Transgender Users Leverage Voice Changers for Gender Presentation in Social Virtual Reality. IEEE VR 2024. Kassie Povinelli and Yuhang Zhao

Social virtual reality (VR) serves as a vital platform for transgender individuals to explore their identities through avatars and foster personal connections within online communities. However, it presents a challenge: the disconnect between avatar embodiment and voice representation, often leading to misgendering and harassment. Prior research acknowledges this issue but overlooks the potential solution of voice changers. We interviewed 13 transgender and gender-nonconforming users of social VR platforms, focusing on their experiences with and without voice changers. We found that using a voice changer not only reduces voice-related harassment, but also allows them to experience gender euphoria through both hearing their modified voice and the reactions of others to their modified voice, motivating them to pursue voice training and medication to achieve desired voices. Furthermore, we identified the technical barriers to current voice changer technology and potential improvements to alleviate the problems that transgender and gender-nonconforming users face.

- A Multi-modal Toolkit to Support DIY Assistive Technology Creation for Blind and Low Vision People. UIST 2023 Demo. Liwen He, Yifan Li, Mingming Fan, Liang He, and Yuhang Zhao

We design and build A11yBits, a tangible toolkit that empowers blind and low vision (BLV) people to easily create personalized do-it-yourself assistive technologies (DIY-ATs). A11yBits includes (1) a series of Sensing modules to detect both environmental information and user commands, (2) a set of Feedback modules to send multi-modal feedback, and (3) two Base modules (Sensing Base and Feedback Base) to power and connect the sensing and feedback modules. The toolkit enables accessible and easy assembly via a “plug-and-play” mechanism. BLV users can select and assemble their preferred modules to create personalized DIY-ATs.

- A Diary Study in Social Virtual Reality: Impact of Avatars with Disability Signifiers on the Social Experiences of People with Disabilities. ASSETS 2023. Kexin Zhang, Elmira Deldari, Yaxing Yao, and Yuhang Zhao

- Practices and Barriers of Cooking Training for Blind and Low Vision People. ASSETS 2023. Ru Wang, Nihan Zhou, Tam Nguyen, Sanbrita Mondal, Bilge Mutlu, and Yuhang Zhao

Cooking is a vital yet challenging activity for blind and low vision (BLV) people, which involves many visual tasks that can be difficult and dangerous. BLV training services, such as vision rehabilitation, can effectively improve BLV people’s independence and quality of life in daily tasks, such as cooking. However, there is a lack of understanding of the practices employed by the training professionals and the barriers faced by BLV people in such training. To fill the gap, we interviewed six professionals to explore their training strategies and technology recommendations for BLV clients in cooking activities. Our findings revealed the fundamental principles, practices, and barriers in current BLV training services, identifying the gaps between training and reality.

- Understanding How Low Vision People Read Using Eye Tracking. CHI 2023. Ru Wang, Linxiu Zeng, Xinyong Zhang, Sanbrita Mondal, and Yuhang Zhao

While being able to read with screen magnifiers, low vision people have slow and unpleasant reading experiences. Eye tracking has the potential to improve their experience by recognizing fine-grained gaze behaviors and providing more targeted enhancements. To inspire gaze-based low vision technology, we investigate the suitable method to collect low vision users’ gaze data via commercial eye trackers and thoroughly explore their challenges in reading based on their gaze behaviors. With an improved calibration interface, we collected the gaze data of 20 low vision participants and 20 sighted controls who performed reading tasks on a computer screen; low vision participants were also asked to read with different screen magnifiers. We found that, with an accessible calibration interface and data collection method, commercial eye trackers can collect gaze data of comparable quality from low vision and sighted people. Our study identified low vision people’s unique gaze patterns during reading, building upon which, we propose design implications for gaze-based low vision technology.

-

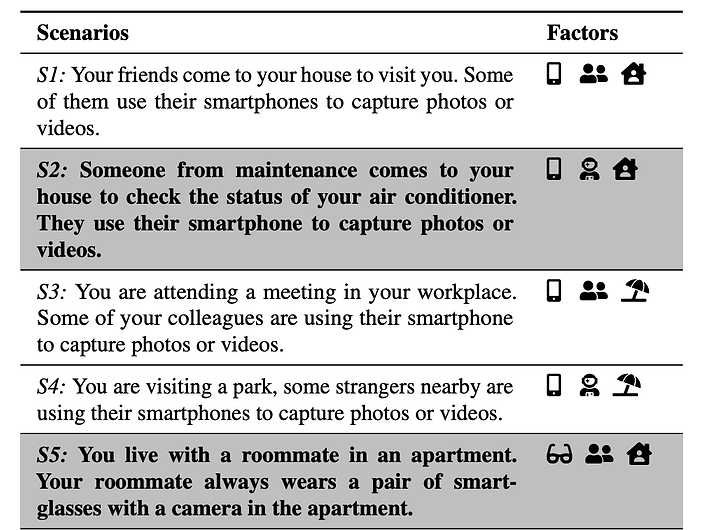

"If Sighted People Know, I Should Be Able to Know:" Privacy Perceptions of Bystanders with Visual Impairments around Camera-based TechnologyUSENIX Security 2023Yuhang Zhao, Yaxing Yao, Jiaru Fu, and Nihan Zhou

"If Sighted People Know, I Should Be Able to Know:" Privacy Perceptions of Bystanders with Visual Impairments around Camera-based TechnologyUSENIX Security 2023Yuhang Zhao, Yaxing Yao, Jiaru Fu, and Nihan ZhouCamera-based technology can be privacy-invasive, especially for bystanders who can be captured by the cameras but do not have direct control or access to the devices. The privacy threats become even more significant to bystanders with visual impairments (BVI) since they cannot visually discover the use of cameras nearby and effectively avoid being captured. While some prior research has studied visually impaired people’s privacy concerns as direct users of camera-based assistive technologies, no research has explored their unique privacy perceptions and needs as bystanders. We conducted an in-depth interview study with 16 visually impaired participants to understand BVI’s privacy concerns, expectations, and needs in different camera usage scenarios. A preliminary survey with 90 visually impaired respondents and 96 sighted controls was conducted to compare BVI and sighted bystanders’ general attitudes towards cameras and elicit camera usage scenarios for the interview study. Our research revealed BVI’s unique privacy challenges and perceptions around cameras, highlighting their needs for privacy awareness and protection. We summarized design considerations for future privacy-enhancing technologies to fulfill BVI’s privacy needs.

- VRBubble: Enhancing Peripheral Awareness of Avatars for People with Visual Impairments in Social Virtual Reality. ASSETS 2022, CHI 2022 LBW. Tiger F Ji, Brianna Cochran, and Yuhang Zhao

Social Virtual Reality (VR) is growing for remote socializing and collaboration. However, current social VR applications are not accessible to people with visual impairments (PVI) due to their focus on visual experiences. We aim to facilitate social VR accessibility by enhancing PVI’s peripheral awareness of surrounding avatar dynamics. We designed VRBubble, an audio-based VR technique that provides surrounding avatar information based on social distances. Based on Hall’s proxemic theory, VRBubble divides the social space with three Bubbles—Intimate, Conversation, and Social Bubble—generating spatial audio feedback to distinguish avatars in different bubbles and provide suitable avatar information. We provide three audio alternatives: earcons, verbal notifications, and real-world sound effects. PVI can select and combine their preferred feedback alternatives for different avatars, bubbles, and social contexts.

-

“It’s Just Part of Me:” Understanding Avatar Diversity and Self-presentation of People with Disabilities in Social Virtual Reality. ASSETS 2022. Kexin Zhang, Elmira Deldari, Zhicong Lu, Yaxing Yao, and Yuhang Zhao

In social Virtual Reality (VR), users are embodied in avatars and interact with other users in a face-to-face manner using avatars as the medium. With the advent of social VR, people with disabilities (PWD) have shown an increasing presence on this new social media. With their unique disability identity, it is not clear how PWD perceive their avatars and whether and how they prefer to disclose their disability when presenting themselves in social VR. We fill this gap by exploring PWD’s avatar perception and disability disclosure preferences in social VR. Our study involved two steps. We first conducted a systematic review of fifteen popular social VR applications to evaluate their avatar diversity and accessibility support. We then conducted an in-depth interview study with 19 participants who had different disabilities to understand their avatar experiences. Our research revealed a number of disability disclosure preferences and strategies adopted by PWD (e.g., reflect selective disabilities, present a capable self). We also identified several challenges faced by PWD during their avatar customization process. We discuss the design implications to promote avatar accessibility and diversity for future social VR platforms.

-

"I was Confused by It; It was Confused by Me:" Exploring the Experiences of People with Visual Impairments around Mobile Service Robots. CSCW 2022, Diversity and Inclusion Recognition. Prajna Bhat and Yuhang Zhao

Mobile service robots have become increasingly ubiquitous. However, these robots can pose potential accessibility issues and safety concerns to people with visual impairments (PVI). We sought to explore the challenges faced by PVI around mainstream mobile service robots and identify their needs. Seventeen PVI were interviewed about their experiences with three emerging robots: vacuum robots, delivery robots, and drones. We comprehensively investigated PVI’s robot experiences by considering their different roles around robots—direct users and bystanders. Our study highlighted participants’ challenges and concerns about the accessibility, safety, and privacy issues around mobile service robots. We found that the lack of accessible feedback made it difficult for PVI to precisely control, locate, and track the status of the robots. Moreover, encountering mobile robots as bystanders confused and even scared the participants, presenting safety and privacy barriers. We further distilled design considerations for more accessible and safe robots for PVI.

-

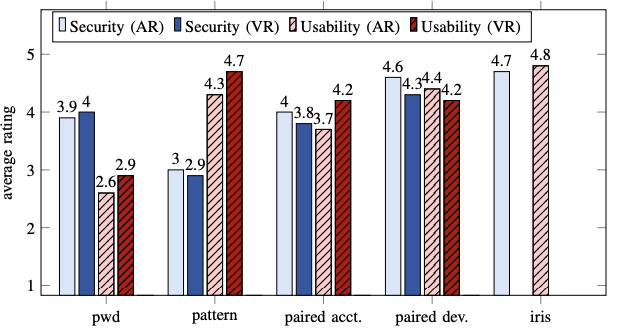

SoK: Authentication in Augmented and Virtual Reality. IEEE S&P 2022. Sophie Stephenson, Bijeeta Pal, Stephen Fan, Earlence Fernandes, Yuhang Zhao, and Rahul Chatterjee

Augmented reality (AR) and virtual reality (VR) devices are emerging as prominent contenders to today’s personal computers. As personal devices, users will use AR and VR to store and access their sensitive data and thus will need secure and usable ways to authenticate. In this paper, we evaluate the state-of-the-art of authentication mechanisms for AR/VR devices by systematizing research efforts and practical deployments. By studying users’ experiences with authentication on AR and VR, we gain insight into the important properties needed for authentication on these devices. We then use these properties to perform a comprehensive evaluation of AR/VR authentication mechanisms both proposed in literature and used in practice. In all, we synthesize a coherent picture of the current state of authentication mechanisms for AR/VR devices. We draw on our findings to provide concrete research directions and advice on implementing and evaluating future authentication methods.

-

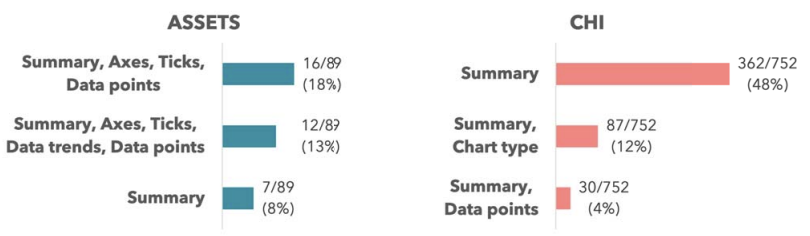

Communicating Visualizations without Visuals: Investigation of Visualization Alternative Text for People with Visual Impairments. IEEE VIS 2021, Best Paper Honorable Mention. Crescentia Jung, Shubham Mehta, Atharva Kulkarni, Yuhang Zhao, and Yea-Seul Kim

Alternative text is critical in communicating graphics to people who are blind or have low vision. Especially for graphics that contain rich information, such as visualizations, poorly written or an absence of alternative texts can worsen the information access inequality for people with visual impairments. In this work, we consolidate existing guidelines and survey current practices to inspect to what extent current practices and recommendations are aligned. Then, to gain more insight into what people want in visualization alternative texts, we interviewed 22 people with visual impairments regarding their experience with visualizations and their information needs in alternative texts. The study findings suggest that participants actively try to construct an image of visualizations in their head while listening to alternative texts and wish to carry out visualization tasks (e.g., retrieve specific values) as sighted viewers would. The study also provides ample support for the need to reference the underlying data instead of visual elements to reduce users’ cognitive burden. Informed by the study, we provide a set of recommendations to compose an informative alternative text.

-

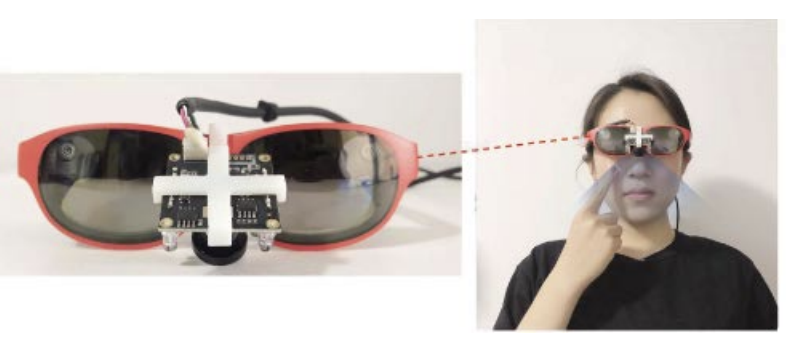

FaceSight: Enabling Hand-to-Face Gesture Interaction on AR Glasses with a Downward-facing Camera Vision. CHI 2021. Yueting Weng, Chun Yu, Yingtian Shi, Yuhang Zhao, Yukang Yan, and Yuanchun Shi

We present FaceSight, a computer vision-based hand-to-face gesture sensing technique for AR glasses. FaceSight fixes an infrared camera onto the bridge of AR glasses to provide extra sensing capability of the lower face and hand behaviors. We obtained 21 hand-to-face gestures and demonstrated the potential interaction benefits through five AR applications. We designed and implemented an algorithm pipeline that segments facial regions, detects hand-face contact (F1 score: 98.36%), and trains convolutional neural network (CNN) models to classify the hand-to-face gestures. The input features include gesture recognition, nose deformation estimation, and continuous fingertip movement. Our algorithm achieves a classification accuracy of 83.06% across all gestures, validated on data from 10 users. Due to the compact form factor and rich gestures, we recognize FaceSight as a practical solution to augment input capability of AR glasses in the future.

-

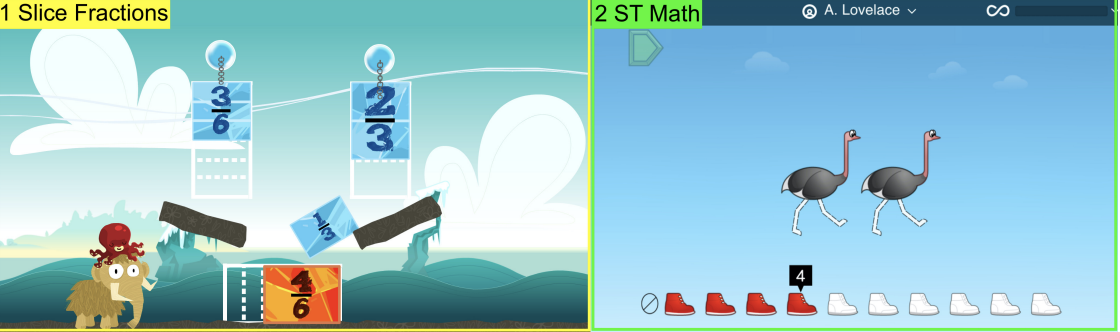

Teacher Views of Math E-learning Tools for Students with Specific Learning Disabilities. ASSETS 2020. Zikai Alex Wen, Erica Silverstein, Yuhang Zhao, Anjelika Lynne Amog, Katherine Garnett, and Shiri Azenkot

Many students with specific learning disabilities (SLDs) have difficulty learning math. To succeed in math, they need to receive personalized support from teachers. Recently, math e-learning tools that provide personalized math skills training have gained popularity. However, we know little about how well these tools help teachers personalize instruction for students with SLDs. To answer this question, we conducted semi-structured interviews with 12 teachers who taught students with SLDs in grades five to eight. We found that participants used math e-learning tools that were not designed specifically for students with SLDs. Participants had difficulty using these tools because of text-intensive user interfaces, insufficient feedback about student performance, inability to adjust difficulty levels, and problems with setup and maintenance. Participants also needed assistive technology for their students, but they had challenges in getting and using it. From our findings, we distilled design implications to help shape the design of more inclusive and effective e-learning tools.

-

The Effectiveness of Visual and Audio Wayfinding Guidance on Smartglasses for People with Low Vision. CHI 2020. Yuhang Zhao, Elizabeth Kupferstein, Hathaitorn Rojnirun, Leah Findlater, and Shiri Azenkot

Wayfinding is a critical but challenging task for people who have low vision, a visual impairment that falls short of blindness. Prior wayfinding systems for people with visual impairments focused on blind people, providing only audio and tactile feedback. Since people with low vision use their remaining vision, we sought to determine how audio feedback compares to visual feedback in a wayfinding task. We developed visual and audio wayfinding guidance on smartglasses based on de facto standard approaches for blind and sighted people and conducted a study with 16 low vision participants. We found that participants made fewer mistakes and experienced lower cognitive load with visual feedback. Moreover, participants with a full field of view completed the wayfinding tasks faster when using visual feedback. However, many participants preferred audio feedback because of its shorter learning curve. We propose design guidelines for wayfinding systems for low vision.

-

Molder: an Accessible Design Tool for Tactile Maps. CHI 2020. Lei Shi, Yuhang Zhao, Ricardo Gonzalez Penuela, Elizabeth Kupferstein, and Shiri Azenkot

Tactile materials are powerful teaching aids for students with visual impairments (VIs). To design these materials, designers must use modeling applications, which have high learning curves and rely on visual feedback. Today, Orientation and Mobility (O&M) specialists and teachers are often responsible for designing these materials. However, most of them do not have professional modeling skills, and many are visually impaired themselves. To address this issue, we designed Molder, an accessible design tool for interactive tactile maps, an important type of tactile material that can help students learn O&M skills. A designer uses Molder to design a map using tangible input techniques, and Molder provides auditory feedback and high-contrast visual feedback. We evaluated Molder with 12 participants (8 with VIs, 4 sighted). After a 30-minute training session, the participants were all able to use Molder to design maps with customized tactile and interactive information.

- SeeingVR: A Set of Tools to Make Virtual Reality More Accessible to People with Low Vision. CHI 2019. Yuhang Zhao, Edward Cutrell, Christian Holz, Meredith Ringel Morris, Eyal Ofek, and Andrew D Wilson

Current virtual reality applications do not support people who have low vision, i.e., vision loss that falls short of complete blindness but is not correctable by glasses. We present SeeingVR, a set of 14 tools that enhance a VR application for people with low vision by providing visual and audio augmentations. A user can select, adjust, and combine different tools based on their preferences. Nine of our tools modify an existing VR application post hoc via a plugin without developer effort. The rest require simple inputs from developers using a Unity toolkit we created that allows integrating all 14 of our low vision support tools during development. Our evaluation with 11 participants with low vision showed that SeeingVR enabled users to better enjoy VR and complete tasks more quickly and accurately. Developers also found our Unity toolkit easy and convenient to use.

- Designing AR Visualizations to Facilitate Stair Navigation for People with Low Vision. UIST 2019. Yuhang Zhao, Elizabeth Kupferstein, Brenda Veronica Castro, Steven Feiner, and Shiri Azenkot

Navigating stairs is a dangerous mobility challenge for people with low vision, who have a visual impairment that falls short of blindness. Prior research contributed systems for stair navigation that provide audio or tactile feedback, but people with low vision have usable vision and don’t typically use nonvisual aids. We conducted the first exploration of augmented reality (AR) visualizations to facilitate stair navigation for people with low vision. We designed visualizations for a projection-based AR platform and smartglasses, considering the different characteristics of these platforms. For projection-based AR, we designed visual highlights that are projected directly on the stairs. In contrast, for smartglasses that have a limited vertical field of view, we designed visualizations that indicate the user’s position on the stairs, without directly augmenting the stairs themselves. We evaluated our visualizations on each platform with 12 people with low vision, finding that the visualizations for projection-based AR increased participants’ walking speed. Our designs on both platforms largely increased participants’ self-reported psychological security.

-

Designing and Evaluating a Customizable Head-mounted Vision Enhancement System for People with Low Vision. TACCESS 2019. Yuhang Zhao, Sarit Szpiro, Lei Shi, and Shiri Azenkot

Recent advances in head-mounted displays (HMDs) present an opportunity to design vision enhancement systems for people with low vision, whose vision cannot be corrected with glasses or contact lenses. We aim to understand whether and how HMDs can aid low vision people in their daily lives. We designed ForeSee, an HMD prototype that enhances people’s view of the world with image processing techniques such as magnification and edge enhancement. We evaluated these vision enhancements with 20 low vision participants who performed four viewing tasks: image recognition and reading tasks from near- and far-distance. We found that participants needed to combine and adjust the enhancements to comfortably complete the viewing tasks. We then designed two input modes to enable fast and easy customization: speech commands and smartwatch-based gestures. While speech commands are commonly used for eyes-free input, our novel set of onscreen gestures on a smartwatch can be used in scenarios where speech is not appropriate or desired. We evaluated both input modes with 11 low vision participants and found that both modes effectively enabled low vision users to customize their visual experience on the HMD. We distill design insights for HMD applications for low vision and spur new research directions.

-

"It Looks Beautiful but Scary": How Low Vision People Navigate Stairs and Other Surface Level Changes. ASSETS 2018, Best Paper Honorable Mention. Yuhang Zhao, Elizabeth Kupferstein, Doron Tal, and Shiri Azenkot

Walking in environments with stairs and curbs is potentially dangerous for people with low vision. We sought to understand what challenges low vision people face and what strategies and tools they use when navigating such surface level changes. Using contextual inquiry, we interviewed and observed 14 low vision participants as they completed navigation tasks in two buildings and through two city blocks. The tasks involved walking in- and outdoors, across four staircases and two city blocks. We found that surface level changes were a source of uncertainty and even fear for all participants. Besides the white cane that many participants did not want to use, participants did not use technology in the study. Participants mostly used their vision, which was exhausting and sometimes deceptive. Our findings highlight the need for systems that support surface level changes and other depth-perception tasks; they should consider low vision people’s distinct experiences from blind people, their sensitivity to different lighting conditions, and leverage visual enhancements.

- Enabling People with Visual Impairments to Navigate Virtual Reality with a Haptic and Auditory Cane Simulation. CHI 2018. Yuhang Zhao, Cynthia L Bennett, Hrvoje Benko, Edward Cutrell, Christian Holz, Meredith Ringel Morris, and Mike Sinclair

Traditional virtual reality (VR) mainly focuses on visual feedback, which is not accessible for people with visual impairments. We created Canetroller, a haptic cane controller that simulates white cane interactions, enabling people with visual impairments to navigate a virtual environment by transferring their cane skills into the virtual world. Canetroller provides three types of feedback: (1) physical resistance generated by a wearable programmable brake mechanism that physically impedes the controller when the virtual cane comes in contact with a virtual object; (2) vibrotactile feedback that simulates the vibrations when a cane hits an object or touches and drags across various surfaces; and (3) spatial 3D auditory feedback simulating the sound of real-world cane interactions. We designed indoor and outdoor VR scenes to evaluate the effectiveness of our controller. Our study showed that Canetroller was a promising tool that enabled visually impaired participants to navigate different virtual spaces. We discuss potential applications supported by Canetroller ranging from entertainment to mobility training.

-

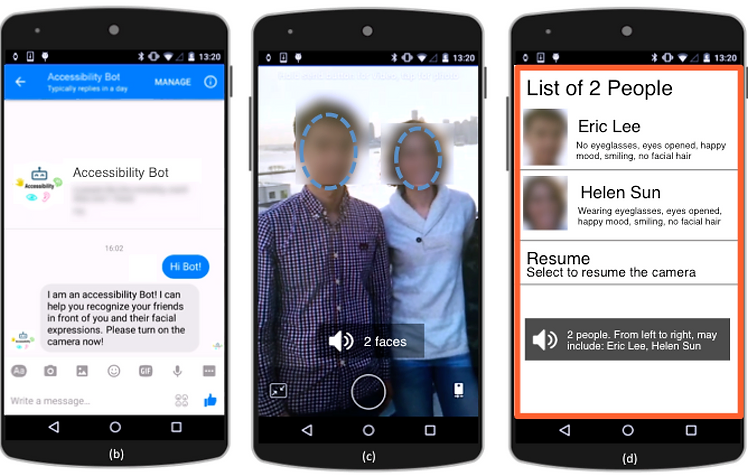

A Face Recognition Application for People with Visual Impairments: Understanding Use beyond the Lab. CHI 2018. Yuhang Zhao, Shaomei Wu, Lindsay Reynolds, and Shiri Azenkot

Recognizing others is a major challenge for people with visual impairments (VIPs) and can hinder engagement in social activities. We present Accessibility Bot, a research prototype bot on Facebook Messenger, that leverages state-of-the-art computer vision and a user’s friends’ tagged photos on Facebook to help people with visual impairments recognize their friends. Accessibility Bot provides users information about identity and facial expressions and attributes of friends captured by their phone’s camera. To guide our design, we interviewed eight VIPs to understand their challenges and needs in social activities. After designing and implementing the bot, we conducted a diary study with six VIPs to study its use in everyday life. While most participants found the Bot helpful, their experience was undermined by perceived low recognition accuracy, difficulty aiming a camera, and lack of knowledge about the phone’s status. We discuss these real-world challenges, identify suitable use cases for Accessibility Bot, and distill design implications for future face recognition applications.

-

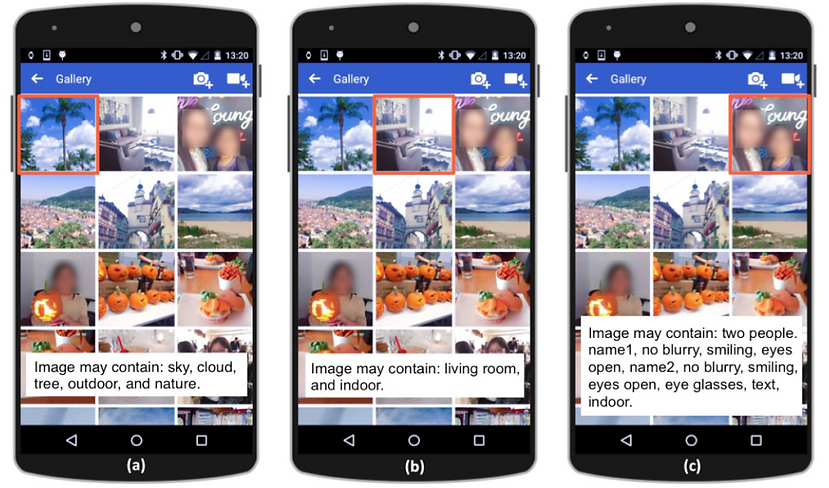

The Effect of Computer-generated Descriptions on Photo-sharing Experiences of People with Visual Impairments. CSCW 2018. Yuhang Zhao, Shaomei Wu, Lindsay Reynolds, and Shiri Azenkot

Like sighted people, visually impaired people want to share photographs on social networking services, but find it difficult to identify and select photos from their albums. We aimed to address this problem by incorporating state-of-the-art computer-generated descriptions into Facebook’s photo-sharing feature. We interviewed 12 visually impaired participants to understand their photo-sharing experiences and designed a photo description feature for the Facebook mobile application. We evaluated this feature with six participants in a seven-day diary study. We found that participants used the descriptions to recall and organize their photos, but they hesitated to upload photos without a sighted person’s input. In addition to basic information about photo content, participants wanted to know more details about salient objects and people, and whether the photos reflected their personal aesthetic. We discuss these findings from the lens of self-disclosure and self-presentation theories and propose new computer vision research directions that will better support visual content sharing by visually impaired people.

-

Designing Interactions for 3D Printed Models with Blind People. ASSETS 2017. Lei Shi, Yuhang Zhao, and Shiri Azenkot

Three-dimensional printed models have the potential to serve as powerful accessibility tools for blind people. Recently, researchers have developed methods to further enhance 3D prints by making them interactive: when a user touches a certain area in the model, the model speaks a description of the area. However, these interactive 3D printed models (I3Ms) were limited in terms of their functionalities and interaction techniques. We conducted a two-section study with 12 legally blind participants to fill in the gap between existing interactive model technologies and end users’ needs, and explore design opportunities. In the first section of the study, we observed participants’ behavior as they explored and identified models and their components. In the second section, we elicited user-defined input techniques that would trigger various functions from an interactive model. We identified five exploration activities (e.g., comparing tactile elements), four hand postures (e.g., using one hand to hold a model in the air), and eight gestures (e.g., using index finger to strike on a model) from the participants’ exploration processes and aggregated their elicited input techniques. We derived key insights from our findings including: (1) design implications for I3M technologies, and (2) specific designs for interactions and functionalities for I3Ms.

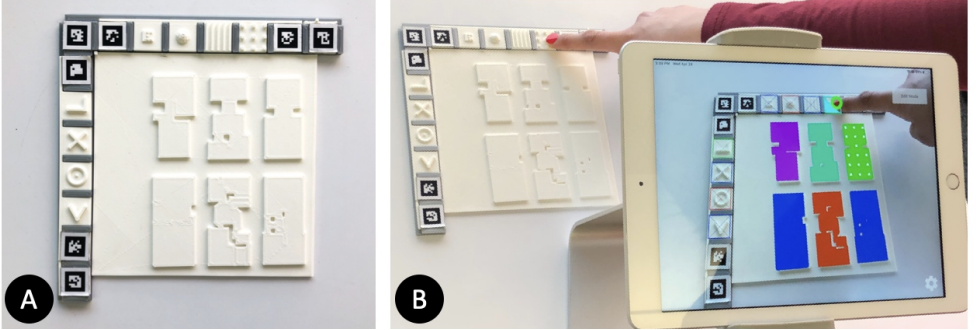

- Markit and Talkit: a Low-barrier Toolkit to Augment 3D Printed Models with Audio Annotations. UIST 2017. Lei Shi, Yuhang Zhao, and Shiri Azenkot

As three-dimensional printers become more available, 3D printed models can serve as important learning materials, especially for blind people who perceive the models tactilely. Such models can be much more powerful when augmented with audio annotations that describe the models and their elements. We present Markit and Talkit, a low-barrier toolkit for creating and interacting with 3D models with audio annotations. Makers (e.g., hobbyists, teachers, and friends of blind people) can use Markit to mark model elements and associate them with text annotations. A blind user can then print the augmented model, launch the Talkit application, and access the annotations by touching the model and following Talkit’s verbal cues. Talkit uses an RGB camera and a microphone to sense users’ inputs so it can run on a variety of devices. We evaluated Markit with eight sighted "makers" and Talkit with eight blind people. On average, non-experts added two annotations to a model in 275 seconds (SD=70) with Markit. Meanwhile, with Talkit, blind people found a specified annotation on a model in an average of 7 seconds (SD=8).

-

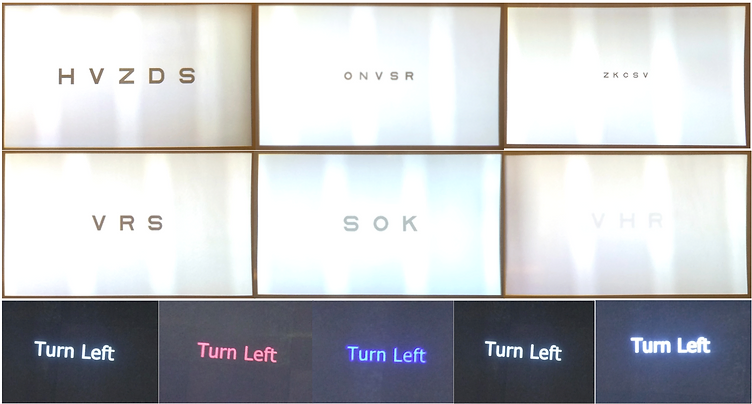

Understanding Low Vision People’s Visual Perception on Commercial Augmented Reality Glasses. CHI 2017. Yuhang Zhao, Michele Hu, Shafeka Hashash, and Shiri Azenkot

People with low vision have a visual impairment that affects their ability to perform daily activities. Unlike blind people, low vision people have functional vision and can potentially benefit from smart glasses that provide dynamic, always-available visual information. We sought to determine what low vision people could see on mainstream commercial augmented reality (AR) glasses, despite their visual limitations and the device’s constraints. We conducted a study with 20 low vision participants and 18 sighted controls, asking them to identify virtual shapes and text in different sizes, colors, and thicknesses. We also evaluated their ability to see the virtual elements while walking. We found that low vision participants were able to identify basic shapes and read short phrases on the glasses while sitting and walking. Identifying virtual elements had a similar effect on low vision and sighted people’s walking speed, slowing it down slightly. Our study yielded preliminary evidence that mainstream AR glasses can be powerful accessibility tools. We derive guidelines for presenting visual output for low vision people and discuss opportunities for accessibility applications on this platform.

-

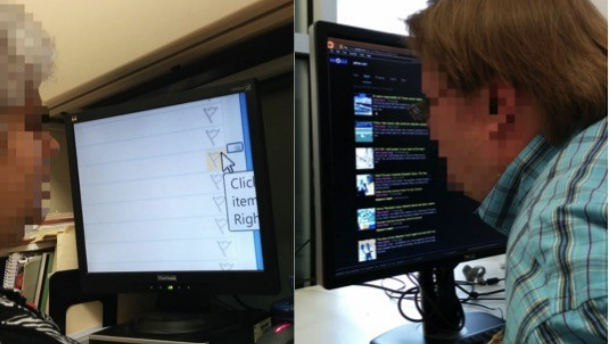

How People with Low Vision Access Computing Devices: Understanding Challenges and Opportunities. ASSETS 2016, Best Paper Honorable Mention. Sarit Felicia Anais Szpiro, Shafeka Hashash, Yuhang Zhao, and Shiri Azenkot

Low vision is a pervasive condition in which people have difficulty seeing even with corrective lenses. People with low vision frequently use mainstream computing devices; however, how they use their devices to access information and whether digital low vision accessibility tools provide adequate support remain understudied. We addressed these questions with a contextual inquiry study. We observed 11 low vision participants using their smartphones, tablets, and computers when performing simple tasks such as reading email. We found that participants preferred accessing information visually rather than aurally (e.g., with screen readers), and juggled a variety of accessibility tools. However, accessibility tools did not provide them with appropriate support. Moreover, participants had to constantly perform multiple gestures in order to see content comfortably. These challenges made participants inefficient: they were slow and often made mistakes; even tech-savvy participants felt frustrated and not in control. Our findings reveal the unique needs of low vision people, which differ from those of people with no vision, and design opportunities for improving low vision accessibility tools.

-

Finding a Store, Searching for a Product: a Study of Daily Challenges of Low Vision People. UbiComp 2016. Sarit Szpiro, Yuhang Zhao, and Shiri Azenkot

Visual impairments encompass a range of visual abilities. People with low vision have functional vision and thus their experiences are likely to be different from people with no vision. We sought to answer two research questions: (1) what challenges do low vision people face when performing daily activities and (2) what aids (high- and low-tech) do low vision people use to alleviate these challenges? Our goal was to reveal gaps in current technologies that can be addressed by the UbiComp community. Using contextual inquiry, we observed 11 low vision people perform a wayfinding and shopping task in an unfamiliar environment. The task involved wayfinding, then searching for and purchasing a product. We found that, although there are low vision aids on the market, participants mostly used their smartphones, despite interface accessibility challenges. While smartphones helped them outdoors, participants were overwhelmed and frustrated when shopping in a store. We discuss the inadequacies of existing aids and highlight the need for systems that enhance visual information, rather than convert it to audio or tactile.

- CueSee: Exploring Visual Cues for People with Low Vision to Facilitate a Visual Search Task. UbiComp 2016. Yuhang Zhao, Sarit Szpiro, Jonathan Knighten, and Shiri Azenkot

Visual search is a major challenge for low vision people. Conventional vision enhancements like magnification help low vision people see more details, but cannot indicate the location of a target in a visual search task. In this paper, we explore visual cues—a new approach to facilitate visual search tasks for low vision people. We focus on product search and present CueSee, an augmented reality application on a head-mounted display (HMD) that facilitates product search by recognizing the product automatically and using visual cues to direct the user’s attention to the product. We designed five visual cues that users can combine to suit their visual condition. We evaluated the visual cues with 12 low vision participants and found that participants preferred using our cues to conventional enhancements for product search. We also found that CueSee outperformed participants’ best-corrected vision in both time and accuracy.

- ForeSee: A Customizable Head-mounted Vision Enhancement System for People with Low Vision. ASSETS 2015. Yuhang Zhao, Sarit Szpiro, and Shiri Azenkot

Most low vision people have functional vision and would likely prefer to use their vision to access information. Recently, there have been advances in head-mounted displays, cameras, and image processing technology that create opportunities to improve the visual experience for low vision people. In this paper, we present ForeSee, a head-mounted vision enhancement system with five enhancement methods (Magnification, Contrast Enhancement, Edge Enhancement, Black/White Reversal, and Text Extraction) and two display modes (Full and Window). ForeSee enables users to customize their visual experience by selecting, adjusting, and combining different enhancement methods and display modes in real time. We evaluated ForeSee by conducting a study with 19 low vision participants who performed near- and far-distance viewing tasks. We found that participants had different preferences for enhancement methods and display modes when performing different tasks. The Magnification Enhancement Method and the Window Display Mode were popular choices, but most participants felt that combining several methods produced the best results. The ability to customize the system was key to enabling people with a variety of vision abilities to improve their visual experience.

- QOOK: Enhancing Information Revisitation for Active Reading with a Paper Book. TEI 2014. Yuhang Zhao, Yongqiang Qin, Yang Liu, Siqi Liu, Taoshuai Zhang, and Yuanchun Shi

Revisiting information on previously accessed pages is a common activity during active reading. Physical and digital books each have their own benefits in supporting this activity, owing to the different ways they are manipulated. In this paper, we introduce QOOK, a paper-book-based interactive reading system that integrates the advanced technology of digital books with the affordances of physical books to facilitate people’s information revisiting process. The design goals of QOOK are derived from a literature survey and our field study of physical and digital books. QOOK allows page flipping just like a real book and enables people to use electronic functions such as keyword searching, highlighting, and bookmarking. We conducted a user study, and the results demonstrate that QOOK enables faster information revisiting and a better reading experience.

- FOCUS: Enhancing Children’s Engagement in Reading by Using Contextual BCI Training Sessions. CHI 2014. Jin Huang, Chun Yu, Yuntao Wang, Yuhang Zhao, Siqi Liu, Chou Mo, Jie Liu, Lie Zhang, and Yuanchun Shi

Reading is an important aspect of a child’s development. Reading outcomes depend heavily on the level of engagement while reading. In this paper, we present FOCUS, an EEG-augmented reading system that monitors a child’s engagement level in real time and provides contextual BCI training sessions to improve the child’s reading engagement. A laboratory experiment was conducted to assess the validity of the system. Results showed that FOCUS could significantly improve engagement in terms of both the EEG-based measurement and teachers’ subjective assessment of the reading outcome.

- PicoPet: "Real World" Digital Pet on a Handheld Projector. UIST 2011 Adjunct. Yuhang Zhao, Chao Xue, Xiang Cao, and Yuanchun Shi

We created PicoPet, a digital pet game based on mobile handheld projectors. The player can project the pet into physical environments, and the pet behaves and evolves differently according to the physical surroundings. PicoPet creates a new form of gaming experience that is directly blended into the physical world, and thus could become part of the player’s daily life and reflect their lifestyle. Multiple pets projected by multiple players can also interact with each other, potentially triggering social interactions between players. In this paper, we present the design and implementation of PicoPet, as well as directions for future explorations.

- VRSight: An AI-driven Scene Description System to Improve Virtual Reality Accessibility for Blind People. UIST 2025

- Inclusive Avatar Guidelines for People with Disabilities: Supporting Disability Representation in Social Virtual Reality. CHI 2025

- Comparing Vibrotactile and Skin-Stretch Haptic Feedback for Conveying Spatial Information of Virtual Objects to Blind VR Users. IEEE VR 2025

- CookAR: Affordance Augmentations in Wearable AR to Support Kitchen Tool Interactions for People with Low Vision. UIST 2024. Belonging and Inclusion Best Paper Award

- A Diary Study in Social Virtual Reality: Impact of Avatars with Disability Signifiers on the Social Experiences of People with Disabilities. ASSETS 2023

- SeeingVR: A Set of Tools to Make Virtual Reality More Accessible to People with Low Vision. CHI 2019